.avif)

Scientific discovery has always relied on good, clean data. Bad data leads to irreproducible outcomes, problematic solutions, and, ultimately, the need to revisit and acquire better data. Now that AI-driven predictive models are becoming more commonplace in early drug discovery workflows, the importance of data that is accurate and consistent across entire research workflows has never been greater.

Messy data remains a persistent challenge in modern drug discovery, causing scientists to spend considerable time resolving inconsistencies in entity naming, catching mismatched database formats, and correcting errors. Machines struggle to handle the nuances of complex datasets because they lack the contextual understanding needed to interpret ambiguity and inconsistencies. Human scientists are tasked frequently with identifying then rectifying issues hidden in data that technology is not equipped to address sufficiently.

For drug discovery teams, messy data creates a ripple effect, leading researchers to design experiments and build models based on flawed assumptions or incomplete information, ultimately wasting valuable resources. This is where the complementary processes of data cleaning and data harmonization play a pivotal role in optimizing workflows, each addressing distinct but interconnected challenges.

- Data cleaning: The process of having experts identify and correct errors, fill in missing values, and remove irrelevant information to ensure accuracy and reliability within individual datasets

- Data harmonization: The human integration of information from multiple sources, standardizing and unifying it into a cohesive framework to enable seamless analysis, comparability, and collaboration

The key to successful cleaning and harmonization lies in human curation.

{{lipid-report="/new-components"}}

Data cleaning, the critical first step

Before scientists can harmonize data, they must clean it. By eliminating the errors that arise from data entry, miscalculations, sensor malfunctions, or system glitches, scientists can build the data foundation required for harmonization. Teams that rely on properly cleaned data gain:

- Improved accuracy, ensuring that the findings drawn from this data are reliable and reproducible, leading to high-quality drug candidates.

- Increased efficiency, reducing the time spent on troubleshooting and re-running analyses due to errors.

- Enhanced collaboration, facilitating better teamwork between teams, institutions, and industries by eliminating discrepancies between datasets.

- Refined predictive power, using the forming clean data to build the foundation for predictive models that can accurately forecast drug-target interactions, disease relationships, and more.

Harmonization without proper data cleaning is like building on quicksand—fragile and unsustainable. When scientists clean data, they ensure that only the most relevant, reliable information is incorporated into downstream processes, providing an accurate view of the research landscape and a sturdy data foundation.

A structured approach for successful data harmonization

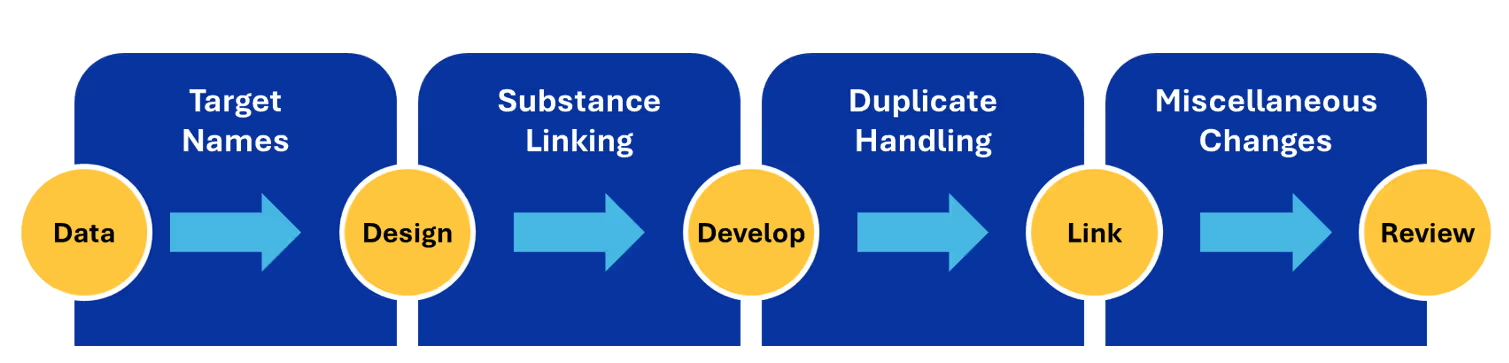

Data harmonization (Figure 1) begins with establishing authority constructs and naming standards. For example, this painstaking work ensures that entities like proteins are uniformly named and categorized across all sources and datasets for drug development purposes to enable target identification.

The next phase in harmonization is substance linking, in which scientists identify and connect references to the same chemical substance across disparate datasets or databases. This process unifies different substance representations, synonyms, and identifiers into a single, consistent entity. This effort is essential to pharmacology and drug discovery, where varying conventions across sources often result in the same compound being described in different ways.

Further along in the harmonization process, data scientists identify and manage exact and related documents, preventing data duplication and ensuring that only the most relevant information is retained. The final step focuses on ensuring consistency of data definitions across datasets, which is essential for producing a cohesive foundation built with data from multiple sources.

Drug discovery teams can navigate complex data landscapes confidently by implementing a harmonization workflow powered by human curation, resulting in reliable datasets primed for advanced analytics and predictive modeling. This systematic approach minimizes errors and ensures that all subsequent research is built on a solid, clean data foundation.

[BREAKER HERE WITH CTA TO RELATED CAS DATA CONTENT]

Gain new perspectives for faster progress directly to your inbox.